MIT.nano Immersion Lab Gaming Program awards 2020 seed grants

MIT.nano has announced its second annual seed grants to support hardware and software research related to sensors, 3D/4D interaction and analysis, augmented and virtual reality (AR/VR), and gaming. The grants are awarded through the MIT.nano Immersion Lab Gaming Program, a four-year collaboration between MIT.nano and video game development company NCSOFT, a founding member of the MIT.nano Consortium.

“We are thrilled to award seven grants this year in support of research that will shape how people interact with the digital world and each other,” says MIT.nano Associate Director Brian W. Anthony. “The MIT.nano Immersion Lab Gaming Program encourages cross-collaboration between disciplines. Together, musicians and engineers, performing artists and data scientists will work to change the way humans think, study, interact, and play with data and information.”

The MIT.nano Immersion Lab is a new, two-story immersive space dedicated to visualizing, understanding, and interacting with large data or synthetic environments, and to measuring human-scale motions and maps of the world. Outfitted with equipment and software tools for motion capture, photogrammetry, and visualization, the facility is available for use by researchers and educators interested in using and creating new experiences, including the seed grant projects.

This year, the following seven projects have been selected to receive seed grants.

Mohammad Alizadeh: a neural-enhanced teleconferencing system

Seeking to improve teleconferencing systems that often suffer from poor quality due to network congestion, Department of Electrical Engineering and Computer Science (EECS) Associate Professor Mohammad Alizadeh proposes using artificial intelligence to create a neural-enhanced system.

This project, co-investigated by EECS professors Hari Balakrishnan, Frédo Durand, and William T. Freeman, uses person-specific face image generation models to handle deteriorated network conditions. Before a session, participants exchange their personalized generative models. If the network is congested during the session, clients use these models to reconstruct a high-quality video of each person from a low bit-rate video encoding or just their audio.
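As a rough illustration of the fallback behavior described above, the following Python sketch picks a delivery mode from the measured bandwidth. It is not the team's implementation: the bandwidth thresholds, the mode names, and the `Participant` and `choose_mode` helpers are all hypothetical and exist only to make the decision order concrete.

```python
from dataclasses import dataclass

# Hypothetical thresholds in kilobits per second; these values are
# illustrative assumptions, not figures from the project.
FULL_VIDEO_KBPS = 1500
LOW_BITRATE_KBPS = 100


@dataclass
class Participant:
    name: str
    # True once this person's personalized generative model was
    # exchanged before the session started.
    model_exchanged: bool


def choose_mode(bandwidth_kbps: float, peer: Participant) -> str:
    """Pick how to deliver a peer's video under the current bandwidth."""
    if bandwidth_kbps >= FULL_VIDEO_KBPS:
        return "full_video"
    if not peer.model_exchanged:
        # Without the peer's model there is nothing to reconstruct from.
        return "degraded_video"
    if bandwidth_kbps >= LOW_BITRATE_KBPS:
        return "reconstruct_from_low_bitrate_video"
    return "reconstruct_from_audio"


if __name__ == "__main__":
    alice = Participant("Alice", model_exchanged=True)
    for kbps in (3000, 400, 20):
        print(kbps, choose_mode(kbps, alice))
```

The ordering reflects the description in the text: the generative model is used only when the network is congested, and audio-only reconstruction is the last resort.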

The team believes that, if successful, this research could help solve the major challenge of network bottlenecks that negatively impact remote teaching and learning, virtual reality experiences, and online multiplayer games.

Luca Daniel: dance-inspired models for representing intent

Dancers have an amazing ability to represent intent and emotions very clearly and powerfully using their bodies. EECS Professor Luca Daniel, along with mechanical engineering Research Scientist Micha Feigin-Almon, aims to develop a modeling framework that explains and captures how dancers communicate intent and emotions through their movements.

This is the second NCSOFT seed grant awarded to Daniel and Feigin, who received funding in the 2019 inaugural round. Over the past year, they have used the motion capture equipment and software tools of the Immersion Lab to study how the movements of trained dancers differ from those of untrained individuals. The research indicates that trained dancers produce elegant movements by efficiently storing and retrieving elastic energy from their muscles and connective tissue.

Daniel and Feigin are now formalizing their stretch-stabilization model of human movement, which has broad applicability in the fields of gaming, health care, robotics, and neuroscience. Of particular interest is generating elegant, realistic movement trajectories for virtual reality characters.

Frédo Durand: inverse rendering for photorealistic computer graphics and digital avatars

The field of computer graphics has created advanced algorithms to simulate images, given a 3D model of a scene. However, the realism of the final image is only as good as the input model, and the creation of sophisticated 3D scenes remains a huge challenge, especially in the case of digital humans.

Frédo Durand, the Amar Bose Professor of Computing in EECS, strives to improve 3D models to satisfy all lighting phenomena and faithfully reproduce real scenes and humans by developing inverse rendering techniques to generate photorealistic 3D models from input photographs. The end result could help create compelling digital avatars for gaming or video conferencing, reproduce scenes for telepresence, or create rich realistic environments for video games.

Jeehwan Kim: electronic skin-based long-term imperceptible and controller-free AR/VR motion tracker

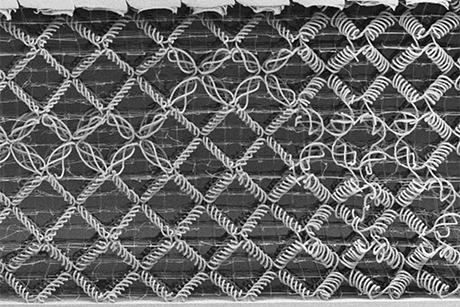

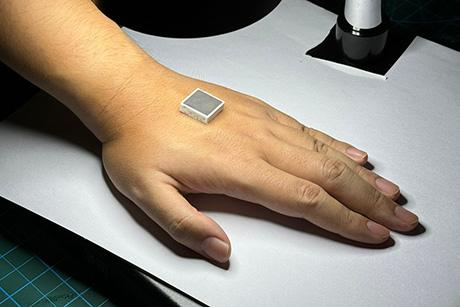

The most widely used input devices for AR/VR interactions are handheld controllers, which are difficult for new users to manipulate and inconvenient to hold, interrupting immersive experiences. Associate professor of mechanical engineering Jeehwan Kim proposes electronic skin (e-skin)-based human motion tracking hardware that can imperceptibly adhere to human skin and accurately detect human motions using skin-conformable optical markers and strain sensors.

Kim’s e-skin would enable detection and input of very subtle human movements, helping to improve the accuracy of the AR/VR experience and facilitate deeper user immersion. Additionally, the LED markers, strain sensors, and EP sensors integrated on the e-skin will together track a large range of motions, from the movements of the joints to those of skin surfaces, as well as emotional changes.

Will Oliver: Qubit Arcade

Qubit Arcade, MIT’s VR quantum computing experience, teaches core principles of quantum computing via a virtual reality demonstration. Users can create Bloch spheres, control qubit states, measure results, and compose quantum circuits in a 3D representation, with a small selection of quantum gates.

In this next phase, EECS Associate Professor Will Oliver, with co-investigators MIT Open Learning Media Development Director Chris Boebel and virtual reality developer Luis Zanforlin, aims to turn Qubit Arcade into a gamified multiplayer educational tool that can be used in a classroom setting or at home, on the Oculus Quest platform. Their project includes adding more quantum gates to the circuit builder and modeling quantum parallelism and interference, which are fundamental characteristics of quantum algorithms.
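For readers unfamiliar with these ideas, a minimal Python sketch of single-qubit gates and interference follows. It is not taken from Qubit Arcade; the helper names `apply_gate` and `probabilities` are illustrative assumptions, and the gates shown (Hadamard and Pauli-Z) are standard textbook definitions.

```python
import math

# A single-qubit state is a pair of complex amplitudes for |0> and |1>.
ZERO = (1 + 0j, 0j)

S = 1 / math.sqrt(2)
H = ((S, S), (S, -S))    # Hadamard gate: creates an equal superposition
Z = ((1, 0), (0, -1))    # Pauli-Z gate: flips the phase of the |1> amplitude


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    (g00, g01), (g10, g11) = gate
    a0, a1 = state
    return (g00 * a0 + g01 * a1, g10 * a0 + g11 * a1)


def probabilities(state):
    """Probabilities of measuring 0 and 1 (squared amplitude magnitudes)."""
    return tuple(abs(a) ** 2 for a in state)


# One Hadamard: measuring gives 0 or 1 with equal probability.
superposed = apply_gate(H, ZERO)

# Two Hadamards: the two paths to |1> cancel (destructive interference),
# so the qubit returns to |0> with certainty.
back_to_zero = apply_gate(H, superposed)

# A phase flip between the Hadamards changes which paths cancel,
# so the qubit ends in |1> instead.
flipped = apply_gate(H, apply_gate(Z, superposed))

if __name__ == "__main__":
    print(probabilities(superposed))
    print(probabilities(back_to_zero))
    print(probabilities(flipped))
```

The second and third cases differ only by a phase that is invisible to a direct measurement of the superposed state, which is the kind of effect a circuit builder with more gates would let students explore.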

The team believes learnings from the educational application of Qubit Arcade have the potential to be applied to a variety of other subjects involving abstract visualizations, mathematical modeling, and circuit design.

Jay Scheib: augmenting opera: “Parsifal”

Music and Theater Arts Professor Jay Scheib’s work focuses on making opera and theater a more immersive event by bringing live cinematic technology and techniques into theatrical forms. In his productions, the audience experiences two layers of reality, the making of the performance and the performance itself, through live production and simultaneous broadcast.

Scheib’s current project is to create a production using AR techniques that would make use of existing extended reality (XR) technology, such as live video effects and live special effects processed in real time and projected back onto a surface or into headsets. He intends to develop an XR prototype for an augmented live performance of Richard Wagner’s opera “Parsifal” through the use of game engines for real-time visual effects processing in live performance environments.

Justin Solomon: AI for designing usable virtual assets

Virtual worlds, video games, and other 3D digital environments are built on huge collections of assets — 3D models of the objects, scenery, and characters that populate each scene. Artists and game designers use specialized, difficult-to-use modeling tools to design these assets, while non-experts are confined to rudimentary mix-and-match interfaces combining simplistic pre-defined pieces.

EECS Associate Professor Justin Solomon seeks to democratize content creation in virtual worlds by developing AI tools for designing virtual assets. In this project, Solomon explores designing algorithms that learn from existing datasets of expert-created 3D models to assist non-expert users in contributing objects to 3D environments. He envisions a flexible toolbox for producing assets from high-level user guidance, with varying part structure and detail.