Sensing the world around us

“Sensing is all around you,” said MIT.nano Associate Director Brian W. Anthony at Ambient Sensing, a half-day symposium presented in May by the MIT.nano Immersion Lab. Featuring MIT faculty and researchers from multiple disciplines, the event highlighted sensing technologies deployed everywhere from beneath the Earth’s surface to high into the exosphere.

Brent Minchew, assistant professor in the Department of Earth, Atmospheric and Planetary Sciences (EAPS), kicked off the symposium with a presentation on using remote sensing to understand the flow, deformation, and fracture of glacier ice, and how that is contributing to sea level rise. “There’s this fantastic separation of scales,” said Minchew. “We’re taking observations collected from satellites that are flying 700 kilometers above the surface, and we’re using the data that’s collected there to infer what’s happening at the atomic scale within the ice, which is magnificent.”

Minchew’s group is working with other researchers at MIT to build a drone capable of flying for three to four months over the polar regions, filling critical gaps in Earth observations. “It’s going to give us this radical improvement over current technology and our observational capacity,” he said.

Also using satellites, EAPS postdoc Qindan Zhu combines machine learning with observational inputs from remote sensing to study ozone pollution over North American cities. Zhu explained that, based on a decade’s worth of data, controlling nitrogen oxide emissions will be the most effective way to regulate ozone pollution in these urban areas. Both Zhu’s and Minchew’s presentations highlighted the important role ambient sensors play in learning more about Earth’s changing climate.

Transitioning from air to sea, Michael Benjamin, principal research scientist in the Department of Mechanical Engineering, spoke about his work on robotic marine vehicles to explore and monitor the ocean and coastal marine environments. “Robotic platforms as remote sensors have the ability to sense in places that are too dangerous, boring, or costly for crewed vessels,” explained Benjamin. At the MIT Marine Autonomy Lab, researchers are designing underwater and surface robots, autonomous sailing vessels, and an amphibious surf zone robot.

Sensing is a huge part of marine robotics, said Benjamin. “Without sensors, robots wouldn’t be able to know where they are, they couldn’t avoid hidden things, they couldn’t collect information.”

Fadel Adib, associate professor in the Program in Media Arts & Sciences and the Department of Electrical Engineering & Computer Science (EECS), is also working on sensing underwater. “Battery life of underwater sensors is extremely limited,” explained Adib. “It is very difficult to recharge the battery of an ocean sensor once it’s been deployed.”

His research group built an underwater sensor that reflects acoustic signals rather than needing to generate its own, requiring much less power. They also developed a battery-free, wireless underwater camera that can capture images continuously and over a long period of time. Adib spoke about potential applications for underwater ambient sensing — climate studies, discovery of new ocean species, monitoring aquaculture farms to support food security, and even beyond the ocean, in outer space. “As you can imagine, it’s even more difficult to replace a sensor’s battery once you’ve shipped it on a space mission,” he said.

Originally working in the underwater sensing world, James Kinsey, CEO of Humatics, is applying his knowledge of ocean sensors to two different markets: public transit and automotive manufacturing. “All of that sensor data in the ocean — the value is when you can geolocate it,” explained Kinsey. “The more precisely and accurately you know that, you can begin to paint that 3D space.” Kinsey spoke about automating vehicle assembly lines with millimeter precision, allowing for the use of robotic arms. For subway trains, he highlighted the benefits of sensing systems to better know a train’s position, as well as to improve rider and worker safety by increasing situational awareness. “Precise positioning transforms the world,” he said.

At the intersection of electrical engineering, communications, and imaging, EECS Associate Professor Ruonan Han introduced his research on sensing through semiconductor chips that operate at terahertz frequencies. Using these terahertz chips, Han’s research group has demonstrated high-angular-resolution 3D imaging without mechanical scanning. They’re working on electronic nodes for gas sensing, precision timing, and miniaturizing tags and sensors.

In two Q&A panels led by Anthony, the presenters discussed how sensing technologies interface with the world, highlighting challenges in hardware design, manufacturing, packaging, reducing cost, and producing at scale. On the topic of data visualization, they agreed on a need for hardware and software technologies to interact with and assimilate data in faster, more immersive ways.

Ambient Sensing was broadcast live from the MIT.nano Immersion Lab. This unique research space, located on the third floor of MIT.nano, provides an environment to connect the physical to the digital — visualizing data, prototyping advanced tools for augmented and virtual reality (AR/VR), and developing new software and hardware concepts for immersive experiences.

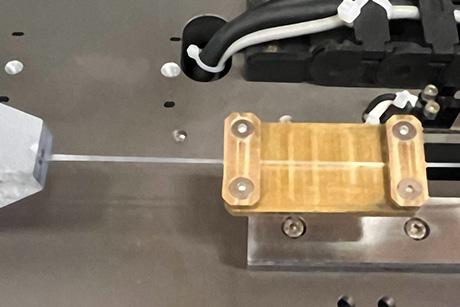

To showcase current work being done in the Immersion Lab, retired MIT fencing coach Robert Hupp joined Anthony and research scientist Praneeth Namburi for a live demonstration of immersive athlete-training technology. Using wireless sensors on the fencing épée paired with OptiTrack motion-capture sensors along the room’s perimeter, a novice fencer wearing a motion-capture suit and an AR headset faced a virtual opponent while Namburi tracked the fencer’s stance on a computer. Hupp was able to show the fencer how to improve his movements with this real-time data.

“This event showcased the capabilities of the Immersion Lab, and the work being done on sensing — including sensors, data analytics, and data visualization — across MIT,” said Anthony. “Many of our speakers talked about collaboration and the importance of bringing multiple fields together to advance ambient sensing and data collection to solve societal challenges. I look forward to welcoming more academic and industry researchers into the Immersion Lab to support their work with our advanced hardware and software technologies.”