Smart laser cutter system detects different materials

With the addition of computers, laser cutters have rapidly become a relatively simple and powerful tool, with software controlling shiny machinery that can chop metals, woods, papers, and plastics. While this curious amalgam of materials feels encompassing, users still face difficulties distinguishing between stockpiles of visually similar materials, where the wrong stuff can make gooey messes, give off horrendous odors, or worse, spew out harmful chemicals.

Addressing what might not be totally apparent to the naked eye, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) came up with “SensiCut,” a smart material-sensing platform for laser cutters. In contrast to conventional, camera-based approaches that can easily misidentify materials, SensiCut uses a more nuanced fusion. It identifies materials using deep learning and an optical method called “speckle sensing,” a technique that uses a laser to sense a surface’s microstructure, enabled by just one image-sensing add-on.
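
As a rough illustration of why a speckle pattern can act like a fingerprint of a surface, the sketch below simulates a toy far-field model in which each sensor pixel sums light from many randomly placed scatterers. This is not SensiCut's code or its actual optics. The function names, the scatterer count, the image size, and the use of a seed to stand in for a physical surface are all assumptions made for illustration.

```python
import cmath
import math
import random
from typing import List


def speckle_image(surface_seed: int, size: int = 8, scatterers: int = 50) -> List[List[float]]:
    """Toy speckle pattern for a hypothetical surface identified by a seed."""
    rng = random.Random(surface_seed)
    # Each scatterer has a position (x, y) and a phase set by its random height.
    points = [
        (rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(0, 2 * math.pi))
        for _ in range(scatterers)
    ]
    image = []
    for row in range(size):
        line = []
        for col in range(size):
            # Each pixel sees the scatterers with a direction-dependent phase shift.
            kx = 2 * math.pi * col
            ky = 2 * math.pi * row
            field = sum(
                cmath.exp(1j * (phase + kx * x + ky * y)) for x, y, phase in points
            )
            # Intensity is the squared magnitude of the summed field.
            line.append(abs(field) ** 2 / scatterers)
        image.append(line)
    return image


def correlation(a: List[List[float]], b: List[List[float]]) -> float:
    """Pearson correlation between two images of the same size."""
    xs = [v for r in a for v in r]
    ys = [v for r in b for v in r]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


same = correlation(speckle_image(1), speckle_image(1))
different = correlation(speckle_image(1), speckle_image(2))
print(f"same surface: {same:.3f}, different surface: {different:.3f}")
```

In this toy model, the same simulated surface reproduces its pattern, while a different surface yields a largely unrelated one. A real system has to cope with noise and sensor placement, which is where a learned classifier comes in.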

A little assistance from SensiCut could go a long way — it could potentially protect users from hazardous waste, provide material-specific knowledge, suggest subtle cutting adjustments for better results, and even engrave various items like garments or phone cases that consist of multiple materials.

“By augmenting standard laser cutters with lensless image sensors, we can easily identify visually similar materials commonly found in workshops and reduce overall waste,” says Mustafa Doga Dogan, PhD candidate at MIT CSAIL. “We do this by leveraging a material’s micron-level surface structure, which is a unique characteristic even when visually similar to another type. Without that, you’d likely have to make an educated guess on the correct material name from a large database.”

Beyond using cameras, sticker tags (like QR codes) have also been used on individual sheets to identify them. This seems straightforward, but if the code is cut off from the main sheet during laser cutting, the sheet can’t be identified for future use. Also, if an incorrect tag is attached, the laser cutter will assume the wrong material type.

To successfully play a round of “what material is this,” the team trained SensiCut’s deep neural network on more than 38,000 images of 30 different material types. It could then differentiate between things like acrylic, foamboard, and styrene, and even provide further guidance on power and speed settings.
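
To make the classification step concrete, here is a minimal sketch of how raw per-material scores from a classifier could be turned into a single predicted label with a confidence value. It assumes a softmax output layer, which the article does not confirm. The function name `top_prediction` and the example scores are hypothetical.

```python
import math
from typing import Dict, Tuple


def top_prediction(scores: Dict[str, float]) -> Tuple[str, float]:
    """Convert raw class scores to a (label, probability) pair via softmax."""
    # Subtract the largest score first for numerical stability.
    peak = max(scores.values())
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    label = max(exps, key=exps.get)
    return label, exps[label] / total


# Made-up scores for three of the material types named in the article.
label, confidence = top_prediction({"acrylic": 2.0, "foamboard": 0.5, "styrene": 0.1})
print(label, round(confidence, 3))
```

A confidence value like this could let an interface ask the user to confirm a low-certainty prediction rather than silently accepting it.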

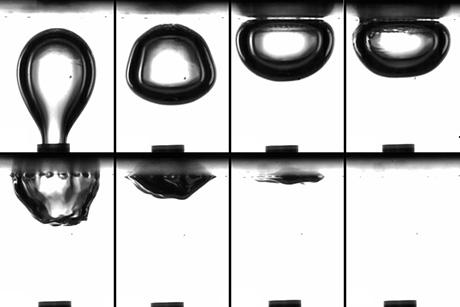

In one experiment, the team decided to build a face shield, which would require distinguishing between transparent materials from a workshop. The user would first select a design file in the interface, and then use the “pinpoint” function to get the laser moving to identify the material type at a point on the sheet. The laser interacts with the very tiny features of the surface and the rays are reflected off it, arriving at the pixels of the image sensor and producing a unique 2-D image. The system could then alert the user that their sheet is polycarbonate, which could mean highly toxic flames if cut by a laser.
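
The safety check described here can be sketched as a lookup on the identified material before any cutting starts. This is an assumption about how such a check might be structured, not the team's implementation. The table contents, the `check_before_cut` function, and the 0.9 confidence threshold are hypothetical. Only the polycarbonate warning comes from the article.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class MaterialInfo:
    hazardous: bool
    note: str


# Illustrative table. The polycarbonate warning is from the article;
# the acrylic entry is an assumption added for contrast.
MATERIALS: Dict[str, MaterialInfo] = {
    "polycarbonate": MaterialInfo(True, "could produce highly toxic flames if laser cut"),
    "acrylic": MaterialInfo(False, "commonly laser cut"),
}


def check_before_cut(label: str, confidence: float, min_confidence: float = 0.9) -> str:
    """Return 'block', 'confirm', or 'proceed' for an identified sheet."""
    info = MATERIALS.get(label)
    if info is None:
        return "confirm"  # unknown material: ask the user
    if info.hazardous:
        return "block"  # err on the side of caution at any confidence
    if confidence < min_confidence:
        return "confirm"  # uncertain prediction: ask the user
    return "proceed"


print(check_before_cut("polycarbonate", 0.97))  # block
print(check_before_cut("acrylic", 0.95))        # proceed
```

The sketch blocks a hazardous prediction even at low confidence, on the reasoning that a false alarm costs less than cutting the wrong sheet.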

The speckle imaging technique was used inside a laser cutter, with low-cost, off-the-shelf components, like a Raspberry Pi Zero microprocessor board. To make it compact, the team designed and 3-D printed a lightweight mechanical housing.

Beyond laser cutters, the team envisions a future where SensiCut’s sensing technology could eventually be integrated into other fabrication tools like 3-D printers. To capture additional nuances, they also plan to extend the system by adding thickness detection, a pertinent variable in material makeup.

Dogan wrote the paper alongside undergraduate researchers Steven Acevedo Colon and Varnika Sinha in MIT's Department of Electrical Engineering and Computer Science, Associate Professor Kaan Akşit of University College London, and MIT Professor Stefanie Mueller.

The team will present their work at the ACM Symposium on User Interface Software and Technology (UIST) in October. The work was supported by the NSF Award 1716413, the MIT Portugal Initiative, and the MIT Mechanical Engineering MathWorks Seed Fund Program.